Authors

Raymond R. Bond1, Khaled Rjoob1, Dewar Finlay1, Victoria McGilligan2, Stephen J Leslie3, Charles Knoery3, Aleeha Iftikhar1, Anne McShane4, Irina Andra Tache5, Pardis Biglarbeigi1, Matthew Manktelow2, Aaron Peace2,6

1. Faculty of Computing and Engineering, Ulster University, Northern Ireland, UK

2. Northern Ireland Centre for Stratified Medicine, Ulster University, Londonderry BT47 6SB, Northern Ireland, UK

3. Division of Rural Health and Wellbeing, University of Highlands and Islands, Scotland, UK

4. Emergency Department, Letterkenny University Hospital, Donegal, Ireland

5. Dept. of Automatic Control and Systems Engineering, University Politehnica of Bucharest, Romania

6. Cardiology Department, Western Health and Social Care Trust, Altnagelvin Hospital, Londonderry, Northern Ireland, UK

Abstract

This ‘point-of-view’ paper outlines the sub-disciplines of artificial intelligence (AI) and discusses a series of opportunities for AI to enhance a typical pathway of a primary percutaneous coronary intervention (PPCI) service. The paper outlines AI applications in interventional cardiology from diagnosis (AI-based cardiac diagnostics), to treatment (AI in the Cath-Lab) and recovery (AI-based patient rehabilitation, patient monitoring and automated clinical feedback). AI applications at the diagnostic phase can include the use of machine learning to assist in clinical decision making, for example when triaging patients, reading 12-lead electrocardiograms to auto-detect coronary occlusions (not just ST elevation myocardial infarctions or STEMIs) or predicting patient mortality. Opportunities for AI at the peri-intervention stage can include computer vision to aid angiographic analysis, ranging from automatic tracking of coronary arteries and anatomy to automated thrombolysis in myocardial infarction (TIMI) grading and SYNTAX scoring. The SYNTAX score is an angiographic tool used to grade the complexity of coronary artery disease by grading 11 characteristics (total occlusion, trifurcation, bifurcation, aorto-ostial lesion, dominance, length, heavy calcification, thrombus, diffuse disease, number of diseased segments and tortuosity). Post-intervention AI opportunities might involve intelligent monitoring systems to track patients and AI chatbot technologies to provide personalized coaching for cardiac rehabilitation to improve recovery and reduce readmissions. Automated activity logging in the Cath-Lab using internet of things systems, sensors and cameras could also be used in the post-intervention phase to provide clinical feedback to cardiology teams, showing important trends, associations and patterns during procedures. Whilst these opportunities exist, there are also non-technological challenges, such as regulatory and ethical challenges related to the deployment of AI systems.

Artificial Intelligence

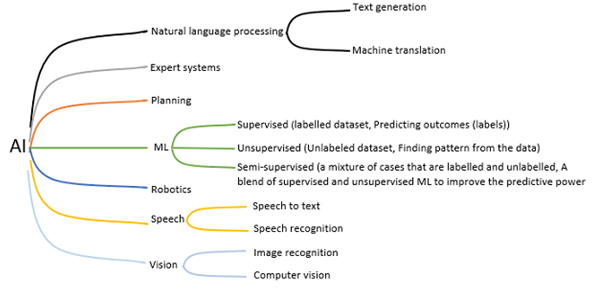

Artificial intelligence (AI) has been referred to as the new electricity and has become a key focus for the future of digital medicine due to its promise for enhancing and expediting patient diagnostics and treatment. Whilst AI has become the leading term in the third and fourth industrial revolutions, other equivalent terms such as intelligent systems, augmented intelligence and computational intelligence still exist. AI research aims to build computer programs that can undertake tasks that would traditionally require human intelligence to complete. However, defining and measuring intelligence is difficult. AI is arguably complementary to human intelligence: AI machines have a greater capacity for working memory (RAM) and can process millions of probabilistic rules, whereas humans have greater autonomy for transfer learning (e.g. using knowledge of draughts to learn chess) and for applying intuition to new, unseen scenarios. With this in mind, it seems inappropriate to imitate human intelligence on a computer if AI naturally enhances and complements human intellect. The field of AI comprises a number of subsidiary topics including: 1) machine learning; 2) natural language understanding; 3) computer vision; 4) robotics; 5) knowledge engineering; and 6) planning (refer to Figure 1). One of the main drivers for the growing interest in AI has been machine learning and deep learning (a subset of machine learning). Machine learning comprises many techniques that require large datasets, and the computational power to use these datasets, in order to ‘machine learn’. Machine learning can be divided into: 1) supervised machine learning; 2) unsupervised machine learning; and 3) semi-supervised machine learning.

Supervised machine learning involves training an algorithm using a labelled dataset, for example using multiple variables to predict an outcome variable. The algorithm uses the independent variables, or features, to predict the known, validated outcome in the training dataset. The trained algorithm can then be used to predict outcomes for live patient cases. Unsupervised machine learning is normally used when the dataset does not have a label or outcome variable. It is often used to elicit new knowledge from an unlabelled dataset, for example to discover new association rules between variables (looking for antecedents and consequents) or to uncover homogeneous clusters of patients based on a series of characteristics (variables). To avoid confusion, note that unsupervised machine learning can also be used with datasets that have so-called ‘labels’ such as the diagnosis or mortality. For example, the label itself can be a feature in a clustering algorithm in order to uncover associations and characteristics between ‘clusters’ that are dominated by a specific ‘label’. Semi-supervised machine learning is often used when a dataset has a mixture of labelled and unlabelled cases. This type of technique uses the unlabelled cases to increase the resolution of the feature space and to refine the decision boundary in a predictive learning algorithm, often resulting in higher performance. Semi-supervised machine learning is underutilized, yet it is very useful given that it can be costly to annotate cases or to create a gold standard outcome label for all patient cases.
Machine learning is summarized in this paper because it cuts across a number of other sub-areas of AI as shown in Figure 1, including natural language processing (where the machine seeks to process and understand speech and text) and computer vision (image processing where the machine can classify objects from an image captured using a camera, e.g. automatically interpreting medical images such as angiograms). There are many techniques within machine learning, but the leading technique in recent years has been deep learning, which uses multilayer neural networks. Deep learning is not a new technique but is one that outperforms other techniques when large patient datasets are available. This has become a focus for AI, whereas the early application of AI in medicine involved symbolic reasoning and knowledge engineering, which entailed codifying medical expertise into rules to provide assistive expert systems [1]. Machine learning, however, has a major disadvantage given that it normally only learns from a large number of examples or patient cases, as opposed to humans who can learn from a smaller number of cases or indeed from single experiences (one-shot learning). Humans can do this by creating cognitive heuristics, e.g. humans can often learn from a small number of archetypical examples in a textbook or from experience and extrapolate beyond that. For this and other reasons, there remains a quest for a master algorithm that can reason based on limited cases and afford transfer learning to other domains [2]. However, whilst humans can learn from a smaller number of examples when compared to a machine, humans require a time-consuming educational experience to become competent in a sophisticated domain such as medicine.
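The three learning settings described above can be contrasted in a minimal sketch. Everything here is an illustrative assumption rather than a method from the paper: the feature values are made up, a nearest-centroid classifier stands in for supervised learning, a one-dimensional two-cluster k-means stands in for unsupervised learning, and simple self-training (pseudo-labelling) stands in for semi-supervised learning.

```python
from statistics import mean

# Toy, made-up data: (feature value, label). Not patient data.
labelled = [(0.9, 1), (1.1, 1), (1.0, 1), (0.1, 0), (0.2, 0), (0.0, 0)]


def fit_centroids(cases):
    """Supervised: use the known labels to learn one centroid per class."""
    by_label = {}
    for x, y in cases:
        by_label.setdefault(y, []).append(x)
    return {y: mean(xs) for y, xs in by_label.items()}


def predict(centroids, x):
    """Assign a new case to the class whose centroid is nearest."""
    return min(centroids, key=lambda y: abs(x - centroids[y]))


def two_means(values, iterations=10):
    """Unsupervised: 1-D k-means with k=2; the labels are never used."""
    lo, hi = min(values), max(values)
    for _ in range(iterations):
        near_lo = [v for v in values if abs(v - lo) <= abs(v - hi)]
        near_hi = [v for v in values if abs(v - lo) > abs(v - hi)]
        if not near_lo or not near_hi:
            break  # degenerate case, e.g. all values identical
        lo, hi = mean(near_lo), mean(near_hi)
    return lo, hi


def self_train(cases, unlabelled):
    """Semi-supervised: pseudo-label the unlabelled cases, then refit."""
    centroids = fit_centroids(cases)
    pseudo = [(x, predict(centroids, x)) for x in unlabelled]
    return fit_centroids(cases + pseudo)


if __name__ == "__main__":
    model = fit_centroids(labelled)
    print("predicted class for 0.8:", predict(model, 0.8))
    print("cluster centres:", two_means([x for x, _ in labelled]))
    print("refined centroids:", self_train(labelled, [0.7, 0.4]))
```

In practice each of these stand-ins would be replaced by a properly validated algorithm; the point is only that the supervised and semi-supervised functions consume labels, while the clustering function does not.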

Figure 1. AI sub-areas and applications.

Interventional Cardiology

A heart attack occurs when a thrombus totally obstructs blood from freely flowing through a coronary artery, thus depriving myocardial muscle of nourishment, which leads to irreversible cell death and possibly patient mortality or heart failure. Coronary heart disease is the leading cause of heart attacks, and those individuals deemed to be at very high risk of a heart attack have a 10% risk of death within 10 years. Importantly, those patients who have recurrent events have significantly higher mortality rates. To attenuate any further damage, a stent is minimally invasively inserted into the culprit artery and a balloon-tipped catheter is used for reperfusion therapy. Whilst this procedure is now well established, there remain opportunities for AI to enhance these services and to help reduce recurrent Major Adverse Cardiovascular Events (MACE). Currently clinicians rely on score charts such as the GRACE (Global Registry of Acute Coronary Events) or TIMI (Thrombolysis in Myocardial Infarction) tools to predict who is most susceptible. GRACE is a tool that uses eight variables (age, systolic blood pressure, heart rate, ST-segment deviation, signs of heart failure, creatinine levels, cardiac arrest on admission and a positive cardiac biomarker) to estimate the risk of patient death after acute coronary syndrome. TIMI scoring is a similar approach except that it comprises a number of tools: one tool is specific to predicting mortality risk for patients with NSTEMI/unstable angina and another is dedicated to predicting mortality risk for STEMI patients. The TIMI tools are point-based systems that sum the answers to a series of simple polar (yes/no) questions. TIMI flow grade is another tool (in the form of a taxonomy) that is used during angiography to grade the blood flow in the coronary arteries from grade 0 (no perfusion) to grade 3 (full perfusion).
Some studies show that among patients with normal TIMI flow (TIMI 3) after PPCI, there are still some patients with low perfusion at the myocardial tissue level, and therefore a low Myocardial Blush Grade (MBG). MBG is another taxonomy (from grade 0 to grade 3) that is used to grade the level of myocardial blushing or staining that persists in microvasculature regions, as shown on an angiogram as contrast flows in and out of the arteries. It has been shown that patients with low MBG have higher mortality rates [3,4]. In one study, 11% of 924 patients with normal TIMI flow (TIMI 3) had low MBG (MBG 0 or 1). In a follow-up of 16 ± 11 months, the survival rate of these patients was significantly lower than that of patients with high MBG [3]. Although MBG has been shown to be an effective indicator of mortality in patients with normal TIMI flow, it depends heavily on observer judgement and is coarsely scaled [5]. Additionally, angiographic images are required to be of a certain level of quality in order to be analysed for MBG by an observer. In a study of 1610 angiographic images, 40% (644 angiograms) of the images were not ‘analysable’ for MBG [6,7]. This can be due to diaphragmatic attenuation or image quality. Similarly, there is also a need to curate, audit and verify the quality of angiographic data that would be used to train an AI algorithm to measure MBG. Nevertheless, there is an opportunity to develop quantitative and automated tools to help assess myocardial perfusion in consecutive frames of angiograms. Moreover, risk scores such as GRACE and TIMI cannot reliably identify all patients at risk of a heart attack or indeed a recurrent event. Apart from the lack of a single non-invasive definitive predictive test, there is also no standardised approach for the management of patients at very high risk of a heart attack or for those individuals who have suffered such an event. This is largely due to patient and doctor preferences.
Patients often do not comply with the advice or medication given and variability also exists in medications prescribed by different doctors. These factors have far reaching consequences for patient outcomes and patient clinical care pathways as well as for the health service and global economy. There is a clear need for a better personalised approach in order to apply effective prevention. There is huge potential for AI to address such clinical needs.
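The point-based, summative nature of the TIMI tools mentioned above can be sketched in a few lines. This is an illustration only and not a clinical tool: the item names are paraphrased from the published TIMI risk score for unstable angina/NSTEMI (seven yes/no items, one point each) and should be checked against the original publication, and the function name `point_score` is a hypothetical helper.

```python
# Paraphrased yes/no items of the TIMI risk score for unstable angina/NSTEMI.
# Assumption: one point per positive answer; verify against the original score.
TIMI_UA_NSTEMI_ITEMS = (
    "age_65_or_older",
    "three_or_more_cad_risk_factors",
    "known_coronary_stenosis_50_percent_or_more",
    "aspirin_use_in_past_7_days",
    "two_or_more_angina_episodes_in_24_hours",
    "st_deviation_0_5_mm_or_more",
    "elevated_cardiac_biomarker",
)


def point_score(answers, items=TIMI_UA_NSTEMI_ITEMS):
    """Sum one point for every item answered 'yes' (True)."""
    missing = [item for item in items if item not in answers]
    if missing:
        # Refuse to score an incomplete form rather than assume 'no'.
        raise ValueError(f"missing answers: {missing}")
    return sum(1 for item in items if answers[item])


if __name__ == "__main__":
    example = {item: False for item in TIMI_UA_NSTEMI_ITEMS}
    example["age_65_or_older"] = True
    example["elevated_cardiac_biomarker"] = True
    print("score:", point_score(example))
```

A machine learning model differs from this kind of chart in that the weights are learned from data rather than fixed at one point per item.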

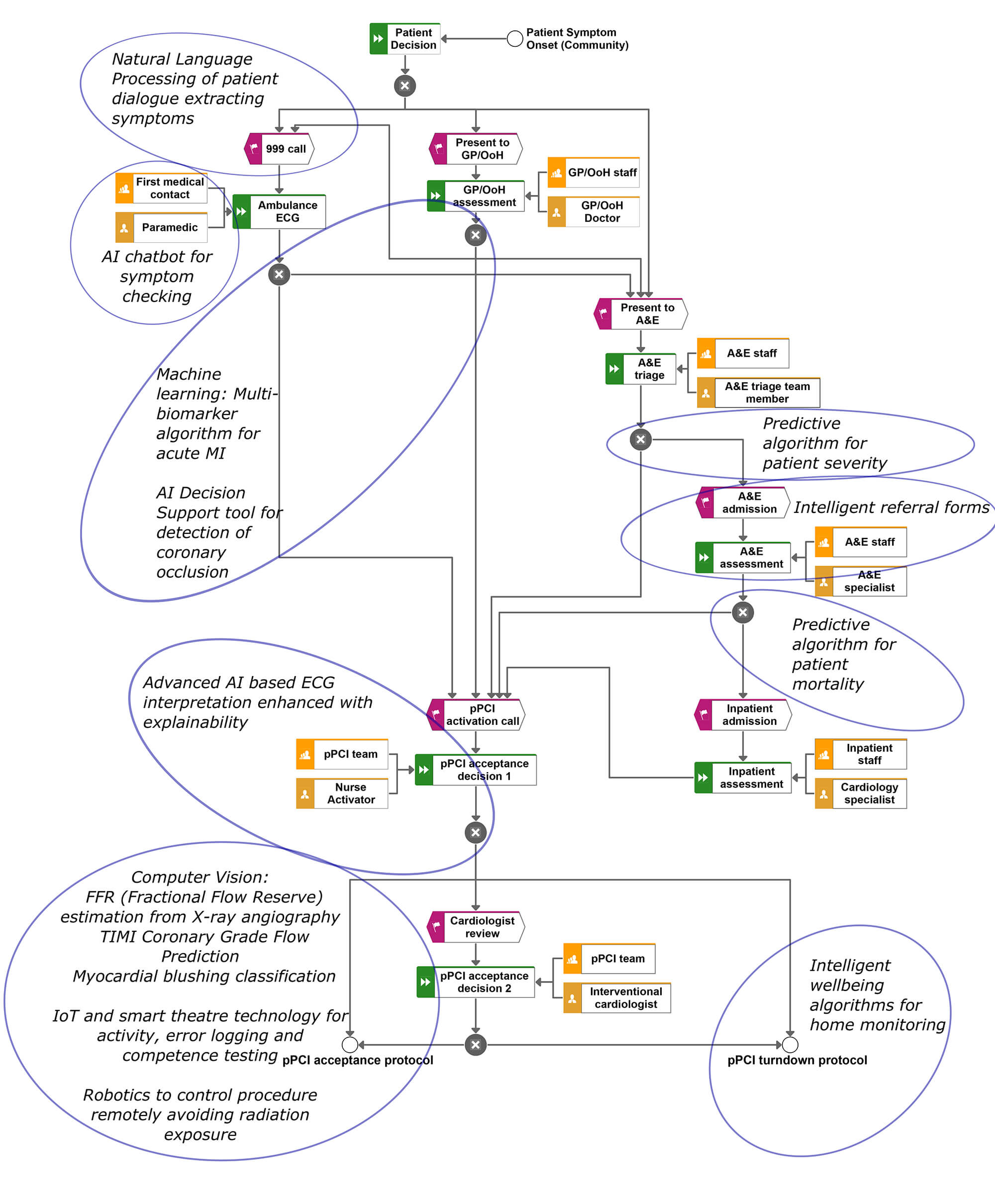

Figure 2. Typical patient pathway for a primary percutaneous coronary intervention service based at Altnagelvin Hospital, Northern Ireland accompanied by example AI ideations that could be implemented to augment or enhance the pathway.

Opportunities and Challenges

Having summarised some general aspects of AI, it is inevitable that this domain will have an impact in interventional cardiology. Figure 2 presents a typical pathway for a PPCI service and illustrates points in the pathway where clinical decisions are taken. The figure also provides some annotations to highlight some opportunities where AI could be used to enhance the service.

AI for ECG interpretation and STEMI detection

The pathway towards a cardiac intervention such as angioplasty (undertaken in a PPCI service via a Cath-Lab) will involve the human interpretation of the 12-lead electrocardiogram (ECG). The ECG is an inexpensive test which remains one of the most prevalent diagnostic tools used in medicine today. The ECG presents the electrical activity of the heart, allowing the clinician to detect cardiac problems. Along with other symptoms, the ECG is the main tool used to diagnose patients with acute myocardial infarctions (acute MIs) prior to minimally invasive cardiac interventions. However, a large number of studies report that clinicians are sub-optimal in correctly reading ECGs [8]. Interestingly, one of the first applications of AI in medicine was the automated interpretation of ECGs, with research dating back to the 1960s [9]. Since then, automated ECG interpretation has struggled to outperform humans. However, research shows that the human does better when assisted by the algorithm, since the computer and the human can make different errors [10]. These early algorithms were mainly developed using knowledge engineering and symbolic rule-based programming [11]. More recently, deep learning has been used in a number of studies to automatically interpret ECGs [12]. Some studies have even used photographs of the ECG as input to the algorithm, which bypasses the need to access heterogeneous proprietary ECG storage formats in order to obtain the raw signals. The algorithms used to detect acute MI are mainly based on simplified criteria such as STEMI. The opportunity herein is to use deep learning to predict occluded arteries as opposed to STEMIs. In other words, deep learning can utilize the entire ECG (not just ST changes) to predict occluded arteries as confirmed by angiography. The data required for this type of study would include the diagnostic ECG used for all patients referred to PPCI and all associated angiograms.
The challenge is that the dataset from NSTEMI patients would ideally need to include angiograms recorded with the same ECG-angiogram times as the STEMI group. This would be a major contribution to the field given that a large number of NSTEMI patients have occluded arteries that are not manifested on the ECG and their prognosis is often not promising perhaps partly due to the delayed elective treatment. Nevertheless, a decision support algorithm can be enhanced using both the ECG and other data including symptoms and biomarkers.
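Whichever algorithm is used, the proposal above implies scoring ECG-based predictions against the angiographic finding rather than against STEMI criteria. A minimal sketch of that evaluation follows; the function name and the boolean example values are assumptions made for illustration and are not study data.

```python
def diagnostic_performance(predicted, occluded):
    """Compare ECG-based predictions with angiography-confirmed occlusion.

    predicted: list of bools, True if the rule or model flags an occlusion.
    occluded:  list of bools, True if angiography confirmed an occlusion.
    """
    if len(predicted) != len(occluded):
        raise ValueError("inputs must have the same length")
    pairs = list(zip(predicted, occluded))
    tp = sum(1 for p, o in pairs if p and o)
    fp = sum(1 for p, o in pairs if p and not o)
    fn = sum(1 for p, o in pairs if not p and o)
    tn = sum(1 for p, o in pairs if not p and not o)
    return {
        "tp": tp, "fp": fp, "fn": fn, "tn": tn,
        # None when the denominator is empty, to avoid dividing by zero.
        "sensitivity": tp / (tp + fn) if (tp + fn) else None,
        "specificity": tn / (tn + fp) if (tn + fp) else None,
    }


if __name__ == "__main__":
    # Made-up values: STEMI criteria met vs occlusion seen on the angiogram.
    stemi_criteria_met = [True, True, False, False, False]
    occlusion_confirmed = [True, False, True, True, False]
    print(diagnostic_performance(stemi_criteria_met, occlusion_confirmed))
```

Running the same function on the output of STEMI criteria and on the output of a trained model, over the same patients, gives a like-for-like comparison against the angiographic reference.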

AI for electrode misplacement detection and data cleaning

Arguably, AI algorithms could be used during ECG acquisition to detect lead misplacement, which is a known problem that could mislead the human or any machine-based decision support [13]. Lead misplacement algorithms can be developed by recording correct and incorrect ECGs from patients, which can then be used to train an algorithm to discriminate between these labels (correct and incorrect). Many researchers also hold body surface potential maps (BSPMs) [14], which consist of ECG leads that are simultaneously recorded from multiple sites on a patient's torso, and this data is ideal for developing lead misplacement algorithms. Nevertheless, both humans and AI systems can only perform based on the data that they are given, hence there is a need for an AI system to automate data quality and data cleaning procedures prior to AI-based decision making. Perhaps even a simple interactive checklist or toolkit would be useful to help staff collect high quality data.
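One way to obtain ‘incorrect’ examples without re-recording patients is to synthesise them from correctly recorded ECGs. The sketch below swaps in a simpler technique than the BSPM approach described above: it simulates a right-arm/left-arm electrode interchange, for which the effect on the limb leads is known exactly (lead I inverts, leads II and III swap, aVR and aVL swap, and aVF and the chest leads are unchanged). The dictionary layout and function names are assumptions for illustration; chest electrode misplacement cannot be simulated this way and would need BSPM data.

```python
def simulate_ra_la_reversal(ecg):
    """Return a copy of `ecg` as it would appear with RA and LA swapped.

    ecg: dict mapping lead name (e.g. "I", "aVR", "V1") to a list of samples.
    """
    swapped = {lead: list(samples) for lead, samples in ecg.items()}
    swapped["I"] = [-v for v in ecg["I"]]                              # I inverts
    swapped["II"], swapped["III"] = list(ecg["III"]), list(ecg["II"])  # II <-> III
    swapped["aVR"], swapped["aVL"] = list(ecg["aVL"]), list(ecg["aVR"])  # aVR <-> aVL
    return swapped  # aVF and the chest leads are left untouched


def labelled_pairs(ecgs):
    """Build a training set: label 0 = as recorded, 1 = simulated reversal."""
    dataset = []
    for ecg in ecgs:
        dataset.append((ecg, 0))
        dataset.append((simulate_ra_la_reversal(ecg), 1))
    return dataset


if __name__ == "__main__":
    # Two made-up samples per lead, internally consistent (III = II - I).
    ecg = {"I": [1.0, 2.0], "II": [3.0, 4.0], "III": [2.0, 2.0],
           "aVR": [-2.0, -3.0], "aVL": [-0.5, 0.0], "aVF": [2.5, 3.0],
           "V1": [0.1, 0.2]}
    for recording, label in labelled_pairs([ecg]):
        print(label, recording["I"], recording["II"], recording["III"])
```

The labelled pairs could then be passed to any supervised classifier, with the caveat that the ‘as recorded’ ECGs are assumed to have been acquired correctly.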

AI to discover and use multiple blood biomarkers

AI can also be used to combine ECG features with a myriad of blood biomarkers that can be rapidly acquired to detect acute myocardial infarction. If a large number of proteins are recorded for a large number of healthy, STEMI and NSTEMI subjects at the time of PPCI referral (with immediately confirmed angiographic findings), then this data can be used by AI to discover new biomarkers. For example, exploratory data science and unsupervised machine learning can be used to discover new biomarkers, and supervised machine learning can be used to build an algorithm that combines multiple weak biomarkers and ECG features to predict acute MI [15]. It may also be possible to use intelligent data analytics to identify biomarkers that would help further sub-divide the NSTEMI group and to identify NSTEMI patients who have full occlusions without any collateralization.

AI explainability and transparency

What is clear from Figure 2 is that there is an opportunity for a range of decision support tools to guide clinicians in the PPCI pathway. However, these AI tools should ideally be based on a combination of knowledge engineering of current diagnostic criteria and machine learning. All algorithmic decisions should also provide a form of explainability. This is challenging for deep learning algorithms, but attention maps (heat maps showing what the AI looked at on the ECG) can be used to at least visualise which areas of the ECG the AI attended to immediately prior to making its decision. Interestingly, this provides an opportunity to compare AI attention with human attention captured using eye tracking. In this regard, all AI algorithms should be accompanied by an explanation user interface (EUI) where the rationale for AI-based decisions is made transparent and interrogatable, which yields trust. Providing EUIs also mitigates automation bias, which is when the clinician naively trusts and accepts any advice given by a machine [16]. A recent study showed that humans perform better when AI-based ECG interpretation is accompanied by a ‘low’ machine uncertainty index [17]. However, there remains an opportunity to develop a reliable uncertainty index, which could be a composite variable combining metrics such as signal-to-noise ratios, probabilities and expected class accuracies of the algorithm.
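To make the idea of a composite uncertainty index concrete, the following is one possible sketch. It is not a validated index and not the index used in [17]: the equal weights, the 0 to 30 dB range used to rescale the signal-to-noise ratio, and the function name are all assumptions chosen purely for illustration.

```python
def uncertainty_index(snr_db, class_probability, expected_class_accuracy,
                      snr_range_db=(0.0, 30.0), weights=(1.0, 1.0, 1.0)):
    """Combine three confidence signals into one uncertainty value in [0, 1].

    snr_db:                  signal-to-noise ratio of the recording, in dB.
    class_probability:       model probability for the predicted class (0..1).
    expected_class_accuracy: historical accuracy for that class (0..1).
    snr_range_db, weights:   hypothetical tuning choices, not published values.
    """
    for name, value in (("class_probability", class_probability),
                        ("expected_class_accuracy", expected_class_accuracy)):
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} must lie in [0, 1]")
    low, high = snr_range_db
    # Rescale SNR to 0..1 and clip, so 1.0 means a clean recording.
    signal_quality = min(1.0, max(0.0, (snr_db - low) / (high - low)))
    components = (signal_quality, class_probability, expected_class_accuracy)
    confidence = sum(w * c for w, c in zip(weights, components)) / sum(weights)
    return 1.0 - confidence


if __name__ == "__main__":
    print(uncertainty_index(snr_db=12.0, class_probability=0.7,
                            expected_class_accuracy=0.85))
```

Any real index would need its components, scaling and weights to be chosen and validated empirically, for example by checking that higher index values do coincide with more algorithm errors.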

AI for risk stratification and predicting mortality

In addition to AI based ECG interpretation, machine learning could be used to triage patients by predicting the likelihood of mortality or indeed the severity of the referral case. For example, retrospective data on gender, age, level of chest pain, the door-to-balloon time, ECG-to-balloon time, automated ECG interpretation, cardiologist interpretation, patient co-morbidities, past cardiac events etc. and the label of mortality (1 or 0) can be used by a machine learning algorithm to predict mortality [18]. Studies have used machine learning for risk stratification and for more reliable Thrombolysis in Myocardial Infarction (TIMI) scoring [19]. Whilst these are opportunities for AI at the pre-intervention stage, AI can also be used at the peri-intervention stages, which can involve the use of computer vision and robotics.

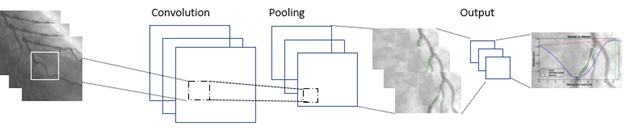

AI for computer vision and robotics

Computer vision as shown in figure 3 can be used to enhance angiographic analysis [20]. This involves the automated segmentation of the angiogram to detect arteries and other anatomy. This is challenging since the heart is dynamic and moves with each heartbeat. Once the arteries are detected, AI algorithms can auto-detect occlusions and areas at risk. In addition, other computer vision techniques could be used to objectively classify any myocardial blushing, which might be useful for predicting mortality, sustained heart failure or reoccurrence [21]. In addition, mechanical robotics could also be used to allow the operator to carry out coronary interventions remotely which could mitigate the harmful effects of radiation [22]. Given planning is another sub-topic of AI which for example is most popularly used for journey planning on Google Maps, AI planning techniques could also be used to provide an optimal series of steps for a given personalised procedure. This is particularly viable for mission rehearsal systems [23]. Of course, one can imagine a more distant future where an autonomous robot might carry out the minimally invasive interventions without human assistance but this is not the ‘near future’.

Figure 3. Analysing angiographic images using computer vision (this is a popular computer vision technique known as a convolutional neural network which is often used for classifying images or object detection in images).

AI for behavior analysis in theatres

Whether humans undertake the procedures unaided or with AI assistance, cameras, sensors and internet of things devices can be used to automatically log activities in the Cath-Lab. This can facilitate tracking of competence, provide insight into procedural patterns and even provide predictions of medical errors [24]. At a minimum, activity logging can be used for data mining for quality improvement and improvement science endeavors within interventional cardiology. The automated activity data could also be used to provide automated feedback to cardiology teams, allowing for trend analysis facilitated by visual analytics and dashboards. Another example is where a multitude of activity log data might be used to discover associations between the frequency of in-theatre events and outcomes (an outcome could be a successful intervention, re-admission or patient mortality).

AI for monitoring cardiac patients

There might also be an opportunity for AI at post-intervention. For example, wearable technology inbuilt with intelligent algorithms could be used to monitor patients for their health and wellbeing whilst also using chatbot technology to coach the patient through cardiac rehabilitation and reablement. The latter might also help mitigate reoccurrences and promote healthy living and self-care. The monitoring devices might also use a form of machine learning or regression to derive unrecorded ECG leads that provide additional data about the patient. For example, a proof of concept system has been developed to record a 12-lead ECG via a smart phone and use coefficients to estimate the entire BSPM [25]. This in turn could be used to provide an inverse solution where the clinician can view the estimated electrical potentials on the epicardium which can help localize cardiac problems (e.g. local ischemia or problems related to electrical conduction).
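Deriving unrecorded leads with coefficients, as described above, amounts to a linear combination of the recorded leads. The sketch below uses the limb leads because their coefficients are exact by definition (III = II − I, aVR = −(I + II)/2, aVL = I − II/2, aVF = II − I/2). The coefficients that estimate a BSPM from a 12-lead ECG in [25] are learned from data and are not reproduced here, so the function and table names are illustrative assumptions only.

```python
# Coefficients (a, b) such that derived_lead = a * lead_I + b * lead_II.
# These four relationships follow from the definitions of the limb leads.
LIMB_LEAD_COEFFICIENTS = {
    "III": (-1.0, 1.0),
    "aVR": (-0.5, -0.5),
    "aVL": (1.0, -0.5),
    "aVF": (-0.5, 1.0),
}


def derive_leads(lead_i, lead_ii, coefficients=LIMB_LEAD_COEFFICIENTS):
    """Derive unrecorded leads as linear combinations of leads I and II."""
    if len(lead_i) != len(lead_ii):
        raise ValueError("leads must contain the same number of samples")
    return {
        name: [a * x + b * y for x, y in zip(lead_i, lead_ii)]
        for name, (a, b) in coefficients.items()
    }


if __name__ == "__main__":
    # Two made-up samples per lead, for illustration only.
    derived = derive_leads([1.0, 2.0], [3.0, 4.0])
    for name, samples in derived.items():
        print(name, samples)
```

Estimating leads that are not fixed by definition follows the same pattern, except that the coefficient table is fitted by regression on recordings where both the input and target leads were measured.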

Natural language processing

Natural language processing (NLP) is perhaps the least useful sub-area of AI in PPCI. However, in addition to the use of chatbots for coaching patients post-treatment, NLP could be used to analyze speech in emergency phone calls to understand speech patterns for different types of patients (for example, certain features may correlate with different diseases such as stroke) or simply to better understand emergency phone calls and conversations from patients with chest pain or heart problems. NLP can also be used to analyze free text that might be entered into a PPCI referral form by coronary care nurses or a cardiologist. Using text analytics to understand these notes might allow us to understand patient archetypes, help develop new taxonomies to classify patients, and discover new associations between patient ‘variables’ that only implicitly exist in ‘free text’. Moreover, chatbot technologies could be used to streamline the referral process and to standardize the recording of symptoms and patient data. This might provide a better user experience in comparison to using paper or even digital forms. Finally, voice-based chatbots akin to smart speakers (e.g. Amazon Alexa) might make useful ‘hands-free’ decision support tools in the Cath-Lab that act as virtual assistants, but specific applications remain to be seen. However, many of these NLP propositions are not likely to be prioritised in comparison to the other aforementioned applications and might even be too error prone.

Conclusions and Discussions

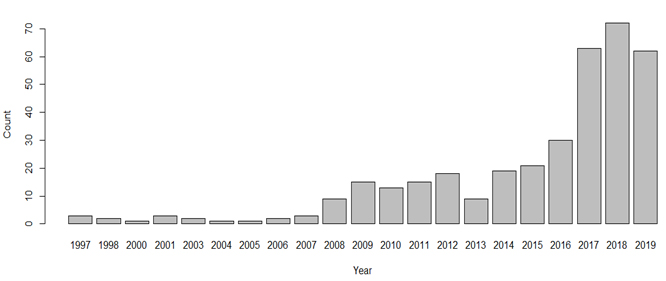

Figure 4 shows the recent trend of AI research in cardiology (number of papers that have the terms ‘AI’ and ‘cardiology’ in the title over the past 20 years according to the Institute of Electrical and Electronics Engineers Xplore digital library). There are clear opportunities to apply AI technology to enhance the PPCI pathway. By 2021, ~50% of hospitals plan to invest in AI because they realise that AI can increase efficiencies and reduce costs [26]. A common question is ‘how much data is needed’ to build an AI system? Unfortunately, there is no exact science to answer this. In supervised machine learning, a number of features/variables are used to predict an outcome/label. When training a machine learning algorithm, you require many more cases (rows) than features (columns). A general heuristic is the ‘one in ten rule’ where there should be 10 cases per feature. Put simply, you need 10 times as many cases (rows) as features (columns) according to this rule. However, this heuristic is not necessarily useful. For example, if we had 10 binary features (each feature simply storing a 1 or 0, e.g. yes or no values) then we would need 2^10 (1024) cases to have just one representation of each possible combination of the 10 features. One also needs to consider the number of classes in the outcome variable and whether there is a sufficient number of cases in the dataset for each class. Another common question is: ‘what is the required accuracy for AI systems?’. This question would need to be considered on a case-by-case basis. Where possible, each AI system should be benchmarked against human expert performance. Regardless of the ‘required’ accuracy, it is important 1) to inform the user of how well the AI system is expected to perform and 2) to understand whether the AI system makes different types of mistakes than humans make for the respective scenario.
The first point is of particular importance as many automated ECG algorithms simply present diagnoses to consider and do not inform the user of how accurate the algorithm typically is for this type of case/class/diagnosis. In the distant future, the training of machine learning algorithms might be expedited using quantum computing, an area known as quantum enhanced machine learning [27]. This would allow algorithms to learn using all available data at a faster rate, perhaps close to ‘real time’. According to Solenov et al. [28], algorithms might use all data available, including the most recent data and recent patient cases, to train an algorithm in real time that can in turn be used by a physician at the point of care. Real time training and use will be a new challenge for medical device regulation but might be ethically obligatory if ‘real time’ quantum training of algorithms improves decision making and patient outcomes. However, much of the research to date is theoretical and its application to medicine might be in the distant future.
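The gap between the ‘one in ten rule’ and full coverage of binary feature combinations, discussed above, can be checked with a few lines of arithmetic. The function names are illustrative only.

```python
def one_in_ten_cases(n_features, cases_per_feature=10):
    """Minimum number of cases suggested by the 'one in ten rule'."""
    return n_features * cases_per_feature


def binary_feature_combinations(n_binary_features):
    """Number of distinct value combinations of n yes/no features."""
    return 2 ** n_binary_features


if __name__ == "__main__":
    for n in (5, 10, 20):
        print(n, one_in_ten_cases(n), binary_feature_combinations(n))
```

For 10 binary features the rule suggests 100 cases, whereas seeing every combination just once would already require 1024 cases, and the gap widens exponentially as features are added.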

Figure 4. Number of papers that have the terms ‘AI’ and ‘cardiology’ in the title of papers over the past 20 years according to the IEEE Xplore digital library.

There are a number of non-technological challenges that also need to be addressed. These include user adoption of AI in clinical cardiology and any generational differences that might currently exist. Only 50 percent of hospital decision makers are familiar with the concept of AI [23]. Simple onboarding activities might entail AI literacy courses for healthcare professionals to increase the likelihood of accepting and trusting AI technologies. Moreover, there may be a need to consider new regulatory procedures and procurement procedures for responsibly assessing, deploying and adopting AI technologies that are transparent, explainable, interrogatable and evidence based. In the longer term, there will also be ethical challenges regarding autonomous AI systems and as to who is responsible and liable for iatrogenic errors. We also believe that the usability and user experience design of AI technologies will be an important factor for their democratization, and that new human-centered AI methodologies and human-AI interaction best practices should be followed when developing these technologies.

Conflict of Interest

Nothing to declare

This work is supported by the European Union’s INTERREG VA programme, managed by the Special EU Programmes Body (SEUPB). The work is associated with the project – ‘Centre for Personalised Medicine – Clinical Decision Making and Patient Safety’. The views and opinions expressed in this study do not necessarily reflect those of the European Commission or the Special EU Programmes Body (SEUPB).

Our mission: To reduce the burden of cardiovascular disease.
