Structured risk assessment and -stratification is an essential part of modern cardiology. European Society of Cardiology (ESC) Guidelines for Preventive Cardiology (1) recommend the use of formal stratification tools to classify subjects according to their risk of first or subsequent CVD events, as well as for patients with established cardiovascular disease (CVD), including ST-elevation myocardial infarction and atrial fibrillation (AF) (2,3). It is then recommended to start treatment or modify treatment intensity, according to estimated risk.

Despite these recommendations, it has been observed that risk assessment tools ‘are not adequately implemented in clinical practice’ (1). One might speculate on the reason why, but it seems quite natural, that the performance of recommended instruments is a relevant factor. For example, the concordance- (C-) statistics for the CHA2DS2-VASc score for the prediction of ischemic stroke in AF (4), and the SMART score for the prediction of the 10-year risk of vascular complications in patients with CVD (5) did not exceed 0.70, which can be classified as ‘modest’. Other risk scores, such as the GRACE score for death/myocardial infarction after acute coronary syndrome admission, have better discriminatory power (C-statistic 0.73-0.77) (6), but there definitely is room for improvement.

Multiple factors contribute to (variations in) the performance of risk prediction instruments, including, but not limited to, the population and endpoint of interest, the sample size, the number of potential predictive variables, and the analytic complexity of the data. Risk stratification tools in cardiology are usually derived from classical regression analyses on (large) routine clinical practice data sets. The outcome to be predicted (‘dependent’ variable Y) is then modelled as a function of a series of selected, predefined predictor (or ‘independent’) variables (X). This supervised approach is straightforward from a statistical point of view, and results in a transparent model. The relations between the Xs and Y are estimated by regression coefficients (‘betas’) that can easily be understood by the end users of the model.

Goldstein et al. recently argued that the field should move beyond these regression techniques, and apply machine learning (ML) instead, ‘to address analytic challenges’ (7). They argue that the performance of ‘classical’ regression is suboptimal in case of non-linear X-Y relationships, dependency of X-Y relationships on other X’s, and in case many X’s are present. Indeed, ML techniques, such as ridge regression and LASSO regression, or the method of the ‘Nearest Neighbour’, are useful alternatives to overcome these challenges (7). My personal view is that risk assessment in cardiology of ML techniques go hand-in-hand with the exploration of ‘Big Data’.

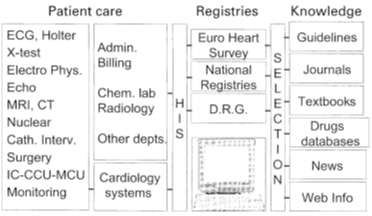

Reproduced with permission from Simoons et al. Eur Heart J 2002;23:1148-52

Our mission: To reduce the burden of cardiovascular disease.

Our mission: To reduce the burden of cardiovascular disease.