Authors

Joana Maria Ribeiro1,2, MD, MSc; Thijmen Hokken1, MD; Patricio Astudillo3, PhD; Rutger Jan Nuis1, MD, PhD; Ole de Backer4, MD, PhD; Giorgia Rocatello3, PhD; Joost Daemen1, MD, PhD; Nicolas M Van Mieghem1, MD, PhD; Paul Cummins1, MSc; Matthieu de Beule3, PhD; Joost Lumens5, PhD; Nico Bruining1, PhD; Peter PT de Jaegere*1, MD, PhD

1. Department of Cardiology, Thoraxcenter, Erasmus Medical Center, Rotterdam, The Netherlands.

2. Department of Cardiology, Centro Hospitalar e Universitário de Coimbra, Coimbra, Portugal.

3. FEops NV, Ghent, Belgium.

4. Department of Cardiology, Rigshospitalet University Hospital, Copenhagen, Denmark.

5. CARIM School for Cardiovascular Diseases, Maastricht University Medical Center, Maastricht, The Netherlands.

Abbreviations

AI – Artificial intelligence

CT – Computed tomography

DL – Deep learning

LAA – Left atrial appendage

LV – Left ventricle

ML – Machine learning

RCT – Randomized controlled trial

TAVI – Transcatheter aortic valve implantation

Abstract

In the current era of evidence-based medicine, guidelines, and hence clinical decisions, are strongly based on data from large-scale randomized clinical trials. Although such trials provide valuable insights into treatment safety and efficacy, their extrapolation to real-world practice is often hindered by strict inclusion and exclusion criteria. Precision Medicine calls for patient-centred clinical management that focuses on the unique features of each individual, and it is of particular importance when invasive procedures are being considered. When applied to Transcatheter Interventions for Structural Heart Disease, Precision Medicine implies selecting the right treatment for the right patient at the right time. Artificial Intelligence and Advanced Computer Modelling are powerful tools for delivering patient-tailored treatment. In this review, we reflect on how such technologies can be used to enhance Precision Medicine in Transcatheter Interventions for Structural Heart Disease.

Introduction

From both the patient’s and the physician’s perspective, the premise of treatment is that it is safe and effective and, preferably, associated with the lowest possible physical and/or emotional burden. In interventional cardiology, safety can be defined by the absence of peri-procedural complications, such as death or stroke, but also of complications from which the patient may not suffer directly, such as conduction abnormalities. Effectiveness relates to short- and long-term clinical outcomes, including device performance and the eventual reversal of the adverse cardiac remodelling caused by (longstanding) valve disease in the case of valve interventions, and the absence of stroke in the case of left atrial appendage (LAA) occlusion. In addition to these clinical and quality-oriented outcome measures, health care authorities also require treatment to be affordable and deliverable (i.e. accessible). In other words, treatment has to be accurate, patient-tailored and embedded in the patient’s social environment. The latter is relatively easy to address by engaging patients and their families in the decision-making process. Defining the most accurate treatment is far more complex and demands a new way of thinking.1

Evidence-based medicine is the foundation of contemporary clinical practice and relies heavily on the findings of randomized controlled trials (RCTs). However, because of their inclusion and exclusion criteria, the patients enrolled in RCTs most often do not represent the patients seen in daily practice. Moreover, the selection criteria only partially capture the complex reality of the patient, as many patient-related features are not considered or cannot be accounted for. This justifies the call for Precision Medicine, which is defined as “treatments targeted to the needs of an individual patient on the basis of genetic, biomarker, phenotypic or psychosocial characteristics that distinguish a given patient from another patient with a similar clinical presentation”.2,3

Precision Medicine has become possible with the advent of powerful computer systems capable of storing and analysing large datasets, combined with refined algorithms that allow outcome to be predicted clinically (i.e. prognosis), technically (i.e. device/host interaction and, thus, valve performance) and/or physiologically (e.g. intra-cardiac haemodynamics, recovery of left ventricular [LV] function). The digital transformation of health care may therefore be expected to improve not only safety and efficacy but also efficiency, by shortening the time needed for decision making while enhancing the quality of treatment decisions (utility vs futility), thereby improving cost-effectiveness. The purpose of this paper is to reflect on and demonstrate how artificial intelligence (AI) and advanced computer modelling can be applied in transcatheter interventions for structural heart disease and how they will affect our clinical practice.

Digital Health and Artificial Intelligence

Digital health has been defined as the use of digital technologies for health. The digitalization of medical records, telemedicine, patient monitoring through mobile devices (mobile health) and remote recruitment of volunteers for research studies are examples of digital health applications in clinical practice.4-7 AI, however, might be the ultimate example of avant-garde digital technology applied to health care.

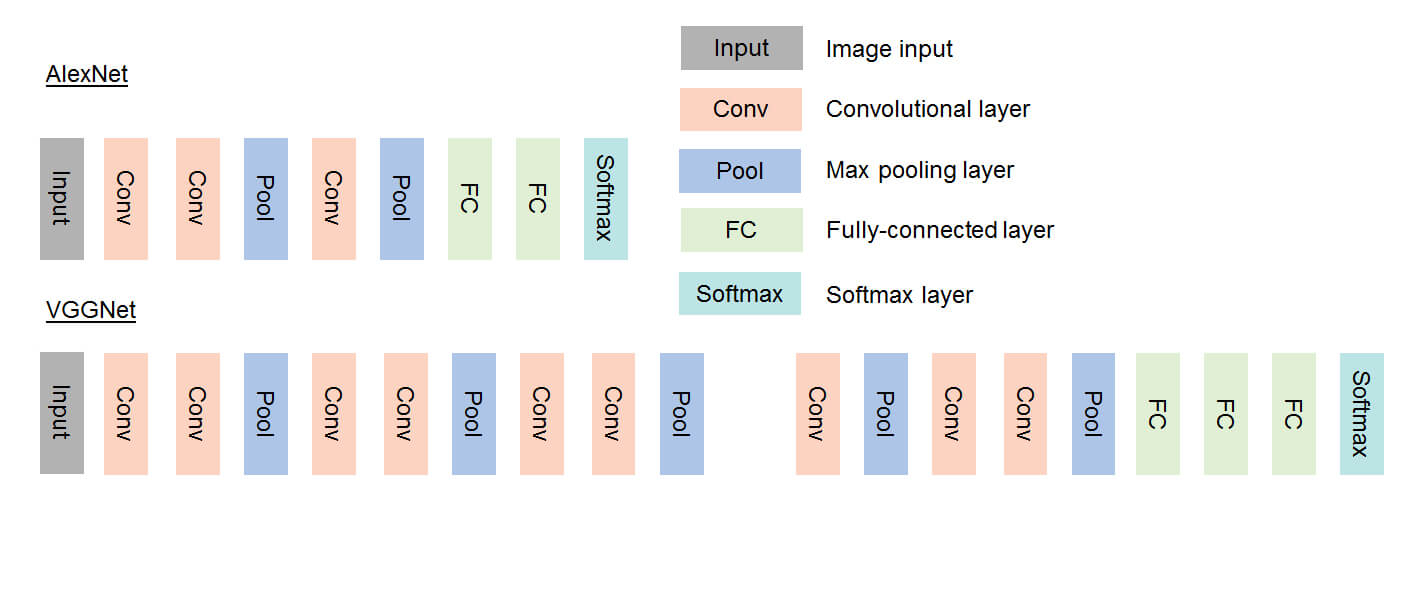

Artificial Intelligence is defined as the capability of a machine to analyse and interpret external data and to learn from them in order to achieve goals through flexible adaptation.7-11 It stems from an initiative taken at the Dartmouth Conference in 1956, during which scientists proposed developing computers able to perform tasks that require human intelligence. Soon thereafter followed the concept of Machine Learning (ML, 1959): mathematical methods for classification, first applied to linear problems (data that can be separated by a straight line). For non-linear problems, neural networks consisting of a small stack of densely connected layers were introduced. These form a shallow network, in which the input signal flows through the layers to the output. By combining multiple types of layers (for example, convolutional layers) and thus enlarging the stack, deeper networks were developed, giving rise to the concept of Deep Learning (DL). DL was accelerated by the advent of graphics processing units (GPUs), originally developed for the gaming industry, which can perform large amounts of floating-point arithmetic in parallel. AI, and DL in particular, were further stimulated by cloud platforms such as Microsoft Azure, which enable the storage of the large amounts of data used to develop and implement AI algorithms.
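The contrast between linear and non-linear problems can be illustrated with a small sketch (ours, for illustration only, not drawn from the cited works). A single-layer perceptron, whose decision boundary is a straight line, learns the logical AND of two inputs but cannot classify XOR perfectly, because no straight line separates the XOR classes. A two-layer network with hand-set weights (hypothetical values chosen for clarity, not learned) solves XOR. All function and variable names are our own.

```python
from typing import List, Tuple

Sample = Tuple[Tuple[float, float], int]

AND_DATA: List[Sample] = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
XOR_DATA: List[Sample] = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]


def step(z: float) -> int:
    """Heaviside step activation: 1 if z > 0, else 0."""
    return 1 if z > 0 else 0


def train_perceptron(data: List[Sample], epochs: int = 50, lr: float = 1.0):
    """Classic perceptron rule; the boundary w1*x1 + w2*x2 + b = 0 is a straight line."""
    w1 = w2 = b = 0.0
    for _ in range(epochs):
        for (x1, x2), target in data:
            error = target - step(w1 * x1 + w2 * x2 + b)
            w1 += lr * error * x1
            w2 += lr * error * x2
            b += lr * error
    return w1, w2, b


def perceptron_accuracy(data: List[Sample], params) -> float:
    """Fraction of samples the trained linear classifier labels correctly."""
    w1, w2, b = params
    correct = sum(step(w1 * x1 + w2 * x2 + b) == t for (x1, x2), t in data)
    return correct / len(data)


def xor_two_layer(x1: float, x2: float) -> int:
    """Two-layer network with hand-set (not learned) weights: XOR = OR and not AND."""
    h_or = step(x1 + x2 - 0.5)   # hidden unit 1 fires for OR
    h_and = step(x1 + x2 - 1.5)  # hidden unit 2 fires for AND
    return step(h_or - h_and - 0.5)  # output unit: OR but not AND


if __name__ == "__main__":
    print("AND accuracy:", perceptron_accuracy(AND_DATA, train_perceptron(AND_DATA)))
    print("XOR accuracy:", perceptron_accuracy(XOR_DATA, train_perceptron(XOR_DATA)))
    print("two-layer XOR:", [xor_two_layer(x1, x2) for (x1, x2), _ in XOR_DATA])
```

Deep networks extend the same idea by stacking many more layers and learning all weights from data rather than setting them by hand.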

DL therefore requires considerable computing power, ideally delivered with low energy demand. This may be achieved, for instance, by stochastic or probabilistic computing. Unlike conventional computing, which operates deterministically on information in binary code (0 and 1), probabilistic computing uses probabilistic bits (p-bits): classical entities that fluctuate randomly between the two states and interact with each other in a manner inspired by human neural networks.15
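As an illustration of the p-bit concept, the sketch below uses a formulation common in the p-bit literature, which we assume here rather than take from reference 15: a p-bit outputs m = sgn(tanh(I) - r), where I is its input and r is drawn uniformly from (-1, 1). Its output is always +1 or -1, but its average follows tanh(I). Coupling two p-bits (I1 = J·m2, I2 = J·m1) makes them tend to agree. The function names, coupling strength J and sample sizes are hypothetical.

```python
import math
import random


def p_bit(input_current: float, rng: random.Random) -> int:
    """One p-bit update: returns +1 or -1, with P(+1) = (1 + tanh(I)) / 2."""
    r = rng.uniform(-1.0, 1.0)
    return 1 if math.tanh(input_current) - r > 0 else -1


def mean_output(input_current: float, n: int = 20000, seed: int = 0) -> float:
    """Time-averaged output of a single p-bit; approaches tanh(I) for large n."""
    rng = random.Random(seed)
    return sum(p_bit(input_current, rng) for _ in range(n)) / n


def coupled_pair_agreement(coupling: float, n: int = 20000, seed: int = 1) -> float:
    """Two p-bits updated in turn, each driven by the other (I_i = J * m_j).

    Positive coupling J makes the pair tend to agree. This sequential update is
    equivalent to Gibbs sampling of a two-spin Ising model at unit temperature.
    """
    rng = random.Random(seed)
    m1, m2 = 1, -1
    agree = 0
    for _ in range(n):
        m1 = p_bit(coupling * m2, rng)
        m2 = p_bit(coupling * m1, rng)
        agree += m1 == m2
    return agree / n


if __name__ == "__main__":
    for current in (-2.0, 0.0, 2.0):
        print(f"I={current:+.1f}  mean={mean_output(current):+.3f}  tanh(I)={math.tanh(current):+.3f}")
    print("agreement, J=2:", coupled_pair_agreement(2.0))
    print("agreement, J=0:", coupled_pair_agreement(0.0))
```

In hardware, the random number r would come from a physical noise source rather than a software generator; the software version only mimics the statistics.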

The coupling of DL techniques with modern advanced computer modelling may become the next step in treatment decision-making and procedural planning for patients with structural heart disease, thereby advancing Precision Medicine.

Artificial Intelligence and Advanced Computer Modelling in Clinical Practice

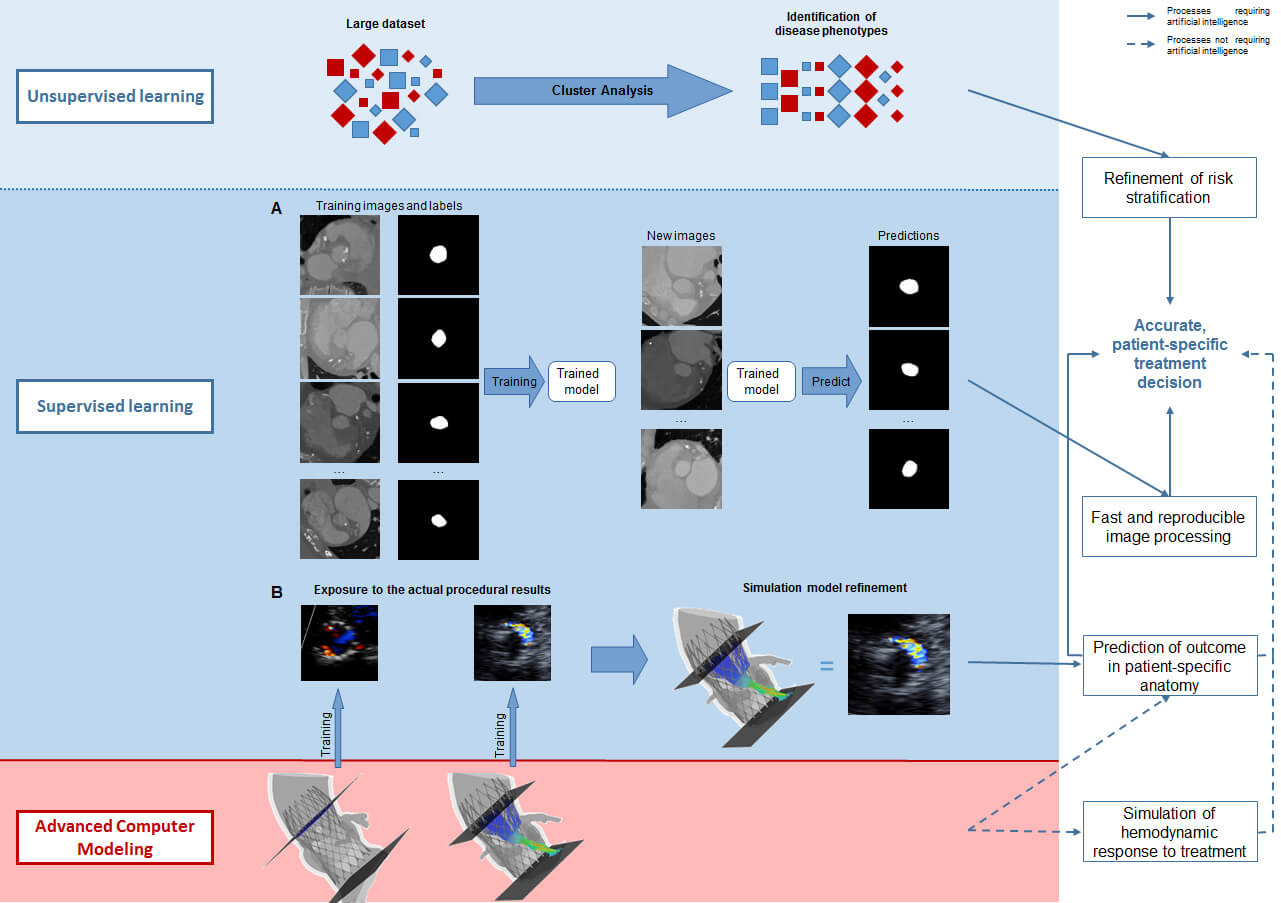

Treatment decision – disease phenotype & prognosis

How could AI help Mrs Jones, who comes to the outpatient clinic because of valvular heart disease such as aortic stenosis, mitral regurgitation or a combination thereof? Echocardiography provides a series of quantitative measures of her heart (e.g. dimensions of the cavities and myocardium, function [LVEF, strain, …]) and valves (e.g. calcification, anatomic and effective orifice area, …), and MRI may provide information on myocardial fibrosis, regurgitant volume if present, etc. AI, and in particular cluster analysis, allows this tremendous amount of data to be processed, making it possible to distinguish phenotypes associated with a benign outcome that do not warrant treatment at this point from those associated with an impaired prognosis for which intervention is indicated. Furthermore, cluster analysis may identify phenotypes that reflect such an advanced disease state that no treatment will improve prognosis, thus avoiding futile intervention.
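A minimal sketch of the cluster-analysis idea follows, assuming k-means as the clustering method (one common choice; the studies discussed here may have used other algorithms). The three echocardiographic variables and all six rows of values are synthetic and purely illustrative, not patient data. Each variable is z-scored first so that measures in different units (%, cm², mmHg) contribute equally to the distance.

```python
import math
from statistics import mean, pstdev
from typing import List, Sequence

Vector = List[float]

# Synthetic, illustrative rows: [LVEF (%), aortic valve area (cm^2), mean gradient (mmHg)]
PATIENTS: List[Vector] = [
    [62.0, 0.9, 42.0],
    [60.0, 0.8, 45.0],
    [65.0, 1.0, 40.0],
    [35.0, 0.7, 28.0],
    [30.0, 0.6, 25.0],
    [38.0, 0.7, 30.0],
]


def standardize(rows: Sequence[Sequence[float]]) -> List[Vector]:
    """Z-score each column so that features in different units weigh equally."""
    cols = list(zip(*rows))
    mus = [mean(c) for c in cols]
    sds = [pstdev(c) or 1.0 for c in cols]  # avoid division by zero for constant columns
    return [[(x - m) / s for x, m, s in zip(row, mus, sds)] for row in rows]


def kmeans(points: List[Vector], k: int, max_iter: int = 100) -> List[int]:
    """Lloyd's k-means with deterministic farthest-point seeding; returns one label per point."""
    centroids = [list(points[0])]
    while len(centroids) < k:
        # next seed: the point whose nearest existing seed is farthest away
        centroids.append(list(max(points, key=lambda p: min(math.dist(p, c) for c in centroids))))
    labels: List[int] = []
    for _ in range(max_iter):
        # assignment step: each point joins its nearest centroid
        new_labels = [min(range(k), key=lambda j: math.dist(p, centroids[j])) for p in points]
        if new_labels == labels:
            break  # assignments unchanged: converged
        labels = new_labels
        # update step: move each centroid to the mean of its members
        for j in range(k):
            members = [p for p, lab in zip(points, labels) if lab == j]
            if members:  # keep the previous centroid if a cluster is empty
                centroids[j] = [mean(dim) for dim in zip(*members)]
    return labels


if __name__ == "__main__":
    print(kmeans(standardize(PATIENTS), k=2))
```

On these toy values the first three rows (preserved LVEF, higher gradient) and the last three (reduced LVEF, lower gradient) fall into separate clusters. In practice, the number of clusters, the choice of variables and the clinical meaning of each cluster all have to be established and validated against outcome.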

Although these advanced computational techniques have not yet been widely implemented, their feasibility has been established.16-20 ML algorithms have been used to identify different phenotypes of aortic stenosis, suggesting that two pathways of disease progression exist and that they may be related to different adaptive mechanisms.20 Primor and colleagues21 used hierarchical cluster analysis of preoperative clinical and echocardiographic characteristics in patients with severe primary mitral regurgitation to define distinct phenotypes with different surgical outcomes. Cluster analysis has also been applied in atrial fibrillation to identify patients at risk of stroke and other adverse events.22,23 Conceptually, it could also be used to understand which anatomic features of the LAA predispose to thrombus formation and/or dislodgement from the LAA. Upon further refinement and validation, AI can and will be used for patient-tailored medicine. It is conceivable that cluster analysis may identify patients or disease phenotypes that are currently not accepted for treatment (e.g. asymptomatic patients or patients with moderate-to-severe valve disease and preserved LV function) but who may benefit from valve replacement or repair, with the primary objective of preserving LV function in the long term.
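Hierarchical cluster analysis, the approach named above for primary mitral regurgitation, builds clusters bottom-up by repeatedly merging the two most similar groups. The sketch below assumes Euclidean distance and average linkage; the settings of the cited study are not described here, and the two-dimensional points are synthetic values standing in for already standardized clinical variables. The names `agglomerative`, `average_linkage` and `TOY_POINTS` are our own.

```python
import math
from itertools import combinations
from typing import List, Sequence

Point = Sequence[float]

# Synthetic 2-D points standing in for standardized clinical variables
TOY_POINTS: List[Point] = [
    (0.1, 0.2), (0.0, 0.1), (0.2, 0.0),  # group A
    (2.0, 2.1), (2.2, 1.9),              # group B
    (-2.0, 1.8), (-2.1, 2.0),            # group C
]


def average_linkage(a: List[int], b: List[int], points: List[Point]) -> float:
    """Mean pairwise Euclidean distance between the members of clusters a and b."""
    return sum(math.dist(points[i], points[j]) for i in a for j in b) / (len(a) * len(b))


def agglomerative(points: List[Point], n_clusters: int) -> List[List[int]]:
    """Bottom-up hierarchical clustering: start from singletons, merge the closest pair."""
    clusters = [[i] for i in range(len(points))]
    while len(clusters) > n_clusters:
        # find the pair of clusters with the smallest average-linkage distance
        i, j = min(
            combinations(range(len(clusters)), 2),
            key=lambda ij: average_linkage(clusters[ij[0]], clusters[ij[1]], points),
        )
        clusters[i] = clusters[i] + clusters[j]
        del clusters[j]  # combinations gives i < j, so index i is unaffected
    return sorted(sorted(c) for c in clusters)


if __name__ == "__main__":
    print(agglomerative(TOY_POINTS, n_clusters=3))
```

Stopping the merges at a chosen number of clusters corresponds to cutting the dendrogram at a given height; where to cut is a modelling decision that, like the choice of variables, needs clinical validation.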

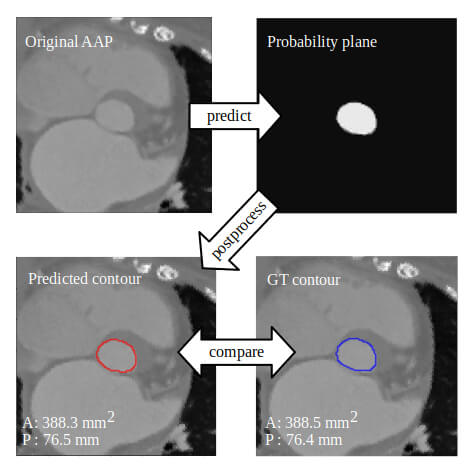

Treatment Planning

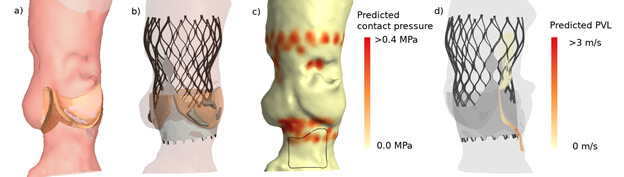

Advanced computer simulation has also been proposed for surgical and catheter-based interventions on the mitral valve and LAA.36-41 It has been shown to accurately simulate transcatheter edge-to-edge mitral valve repair as well as transcatheter mitral valve replacement.35,38,39 The clinical application of patient-specific simulation to determine anatomic (un)suitability for transcatheter mitral valve replacement (i.e. the risk of left ventricular outflow tract obstruction) has been reported.40,41 Bavo and colleagues42 validated the first model for the simulation of percutaneous LAA occlusion and reported a good correlation between the simulated outcomes and post-procedural CT imaging. Some of these groups have used ML to automate structure recognition and model creation from pre-procedural imaging, but have not yet incorporated DL to refine the simulation process.

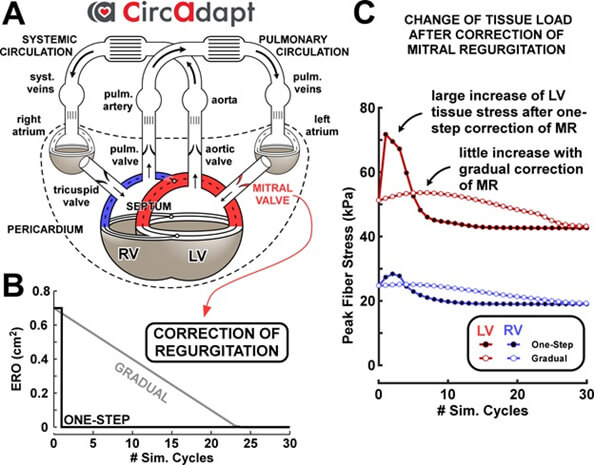

Treatment evaluation – Physiological impact

Summary

As doctors, we interact daily with our patients. We talk to them and experience their doubts, their concerns and their troubles. We eventually come to see a patient as the person she or he is, rather than as just another case file. The medical dilemmas that so often trouble us are inevitable in clinical practice and arise precisely because we want to deliver the best possible treatment to the particular patient standing in front of us. However, our current medical armamentarium does not provide us with a clear answer. Artificial Intelligence makes it possible to process large datasets in such a way that complex patterns and relationships between variables, which would otherwise remain inaccessible to the human eye and mind, will eventually come to light. Artificial Intelligence must thus be regarded as a tool that will allow us to deliver truly patient-centred healthcare, rather than a technology that will take over our clinical tasks. Moreover, Artificial Intelligence, coupled with advanced simulation modelling, gives us the possibility of testing an invasive treatment in a patient-specific anatomic setting, thereby predicting which treatment is optimal for a specific patient. Advanced computer modelling also allows us to pursue a favourable haemodynamic outcome by helping us understand the physiological responses to a given treatment.

Declaration of Interest

The authors have nothing to declare.

Our mission: To reduce the burden of cardiovascular disease.